🔍 AI Highlights This Week

Gear up to ride the AI surge! Explore cutting-edge breakthroughs, insightful expert analysis, and actionable strategies you can apply now. The future is unfolding — join the revolution.

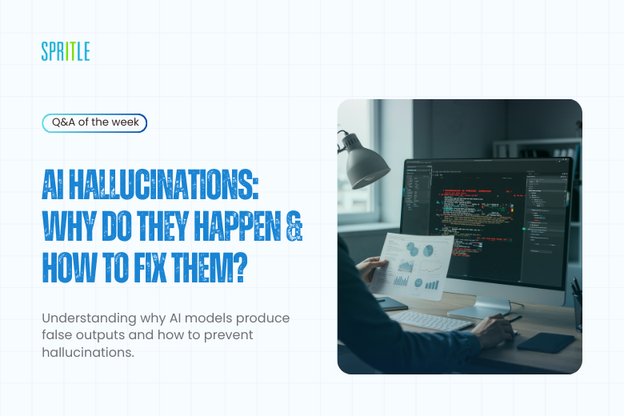

⚡ AI Spotlight: The Dark Side of AI Agents — Hallucination Isn’t Just a Bug. It’s a Dealbreaker. ⚠️

Guesswork won’t cut it—especially not in healthcare, finance, or insurance.

One wrong answer isn’t a UX glitch—it’s a legal risk waiting to explode.

🤖 LLMs don’t look up facts. They predict the text that sounds most plausible.

That’s okay for casual chats. But for mission-critical systems? It’s dangerous.

🛡️ At Spritle, we build AI Agents that know when they don’t know.

With grounding, retrieval pipelines, and real-time fact-checks, hallucination isn’t patched after the fact. It’s designed out from the start.

⚖️ In the U.S. market, trust, compliance, and accuracy aren’t optional—they’re mandatory.

Don’t just deploy generative AI.

Deploy it responsibly.

That’s the Spritle difference.

Hallucination in AI Agents: The Risk That Could Cost Lives and Trust

AI Shift of the Week: Stopping AI Hallucination to Protect Trust and Compliance ⚠️🤖

Hey, industry leaders! While generative AI dazzles with potential, a serious risk lurks beneath—hallucination. It’s not just a glitch; it’s a threat that could cost lives, money, and reputation.

Why It Matters

In healthcare, finance, and insurance, one wrong answer isn’t a UX flaw—it’s a legal nightmare waiting to happen.

LLMs don’t truly “know” facts. They generate the words that sound most plausible, so a fluent, confident answer can still be wrong.

That’s fine for casual chatbots, but in mission-critical systems, it’s dangerous.

Here’s What’s Changing—Fast:

🧠 From Guesswork to Grounding

At Spritle, our AI Agents know when they don’t know. They ground answers in source data, pull that data in through retrieval pipelines, fact-check continuously, and hold back when the evidence isn’t there.
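To make "knowing when you don’t know" concrete, here is a minimal Python sketch of a retrieval step that abstains instead of guessing. It is an illustration only, not Spritle’s implementation: the `Passage` type, the `retrieve` and `answer` functions, the word-overlap score, and the `min_overlap` threshold are all hypothetical stand-ins. A production system would more likely use embedding search and pass the retrieved passages to an LLM.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical identifier for where the text came from
    text: str


def tokens(text):
    """Lowercase word and number tokens. A crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, corpus, min_overlap=0.5):
    """Return passages that share at least `min_overlap` of the question's tokens."""
    q = tokens(question)
    hits = []
    for passage in corpus:
        overlap = len(q & tokens(passage.text)) / max(len(q), 1)
        if overlap >= min_overlap:
            hits.append((overlap, passage))
    # Sort by score only, best match first.
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in hits]


def answer(question, corpus):
    """Answer only from retrieved evidence; abstain when nothing supports an answer."""
    hits = retrieve(question, corpus)
    if not hits:
        # No grounding found: say so instead of producing a plausible guess.
        return {"status": "abstain", "answer": None, "sources": []}
    top = hits[0]
    # A real agent would hand `top.text` to an LLM as context; here we return it as is.
    return {"status": "grounded", "answer": top.text, "sources": [top.source]}
```

The design choice that matters is the explicit `abstain` branch: when retrieval comes back empty, the agent reports that it has no supporting evidence rather than generating something that merely sounds right.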

🔍 Beyond Patchwork Fixes

Hallucination isn’t something you “patch.” You prevent it by designing trustworthy AI systems from the ground up.

🚨 But There’s a Catch

Trust, compliance, and accuracy aren’t optional—especially in the U.S. market. Deploying Gen AI without safeguards is a risk no organization should take.

The AI Agent Edge

⚙️ Fact-Checked Autonomy – AI that acts with verified, reliable knowledge.

🧠 Built for High Stakes – Protecting sensitive decisions in regulated industries.

🔒 Compliance-First Design – Privacy and security baked in, not bolted on.
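As one way to picture "Fact-Checked Autonomy," the Python sketch below puts a verification gate in front of an agent’s action: every claim the agent relies on must appear in the supplied evidence, or the action is routed to a human. All names here (`unsupported_claims`, `gate`, the `escalate_to_human` decision) are hypothetical, and literal substring matching is an assumption made for brevity. A real checker would need something stronger, such as an entailment model or structured record lookups.

```python
def normalize(text):
    """Lowercase and collapse whitespace so matching ignores formatting differences."""
    return " ".join(text.lower().split())


def unsupported_claims(claims, evidence):
    """Return the claims that do not appear verbatim in any evidence string."""
    normalized_evidence = [normalize(item) for item in evidence]
    return [
        claim
        for claim in claims
        if not any(normalize(claim) in item for item in normalized_evidence)
    ]


def gate(action, claims, evidence):
    """Allow the action only if every claim is backed by evidence; otherwise escalate."""
    missing = unsupported_claims(claims, evidence)
    if missing:
        # At least one claim has no backing: block the action and flag it for review.
        return {"decision": "escalate_to_human", "action": None, "unsupported": missing}
    return {"decision": "execute", "action": action, "unsupported": []}
```

The gate fails closed: an agent that cannot show its evidence does not get to act on its own, which is the behavior a regulated workflow would generally want by default.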

The Bottom Line

Hallucination isn’t just a bug. It’s a business and ethical risk.

Don’t just deploy generative AI.

Deploy it responsibly.

🔥 Pro Tip: Building AI Agents without robust safeguards is a gamble no mission-critical system can afford.

At Spritle, we build AI that’s trusted—not just powerful.

Because in AI, accuracy isn’t optional. It’s mandatory.

Stay tuned for next week’s spotlight—because in AI, trust is the new currency.