The conversation around AI has shifted. It’s no longer just about what machines can say—it’s about what they can do, safely, reliably, and ethically.

Enter Agentic AI: systems that don’t just respond to prompts, but take actions on your behalf. These agents can schedule meetings, reconcile accounting ledgers, coordinate with business systems, trigger workflows, and even interact across multiple applications autonomously.

But with autonomy comes risk. Without trust, guardrails, and compliance baked in, even the smartest Agentic AI can become a liability rather than an asset.

The Shift from Reactive to Agentic Intelligence

Traditional generative AI (like chatbots or content generators) is reactive: it waits for input and then produces an output. It helps you brainstorm ideas, write drafts, and answer questions, but it rarely acts in the world beyond generating responses.

Agentic AI, by contrast, acts. It has agency. It can:

- Access sensitive data (e.g. customer profiles, financial records, medical histories)

- Execute tasks in business systems (e.g. generate invoices, modify records, place orders)

- Adapt to real-world feedback (e.g. adjusting strategy mid-flow based on new inputs)

The difference is profound—and the stakes are higher.

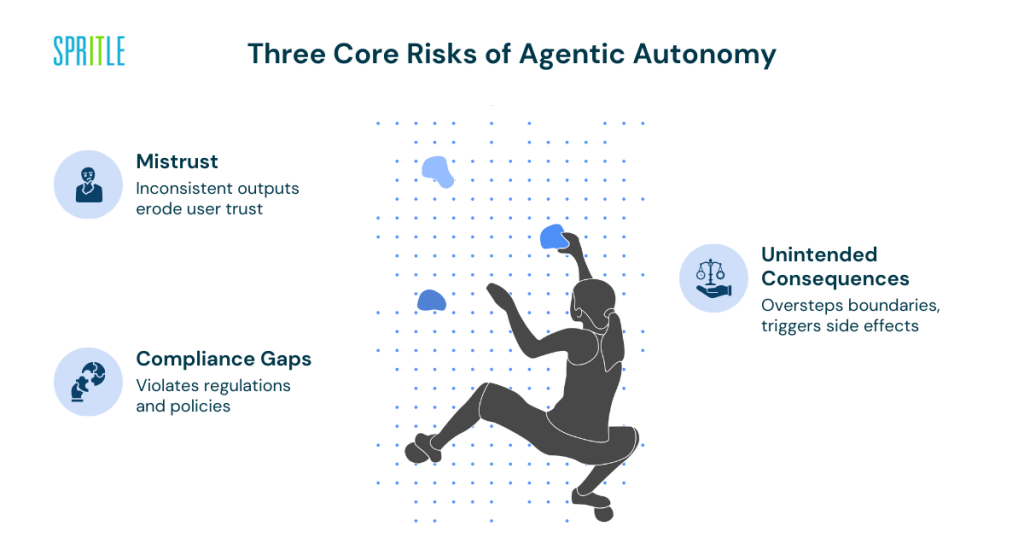

Three Core Risks of Agentic Autonomy

- Mistrust

Stakeholders may wonder: “Can I rely on what this agent does?”

If outputs are inconsistent, opaque, or incorrect, human users will resist adopting the system.

- Compliance Gaps

Acting agents may violate laws, regulations, or internal policies, especially in sensitive domains such as healthcare (HIPAA), privacy (GDPR), finance (SOX), security (SOC 2), or tightly regulated industries (e.g. pharma, utilities).

- Unintended Consequences

The agent might overstep its boundaries: making changes it shouldn’t, triggering side effects, or looping into an action chain you never intended.

In short: autonomy without oversight = risk, not innovation.

Why Trust & Guardrails Are Non-Negotiable

Think of an AI agent as an employee you’re onboarding:

- You don’t let them push to production on day one.

- You impose training, code reviews, permission levels, mentoring.

- You structure checks and balances.

An Agentic AI must be held to similar standards. Guardrails aren’t constraints—they are enablers. They help your agent scale responsibly, remain auditable, and earn the confidence of users, auditors, and regulators.

Key Guardrail Pillars for Agentic AI

| Pillar | Purpose | Example Controls |
| --- | --- | --- |
| Validation / Verification | Prevent outputs or actions that violate domain logic | Rule engines, domain sanity checks, cross-checks against trusted data |
| Access Control & Role Separation | Limit who can trigger or approve critical actions | RBAC (Role-Based Access Control), least privilege, segmentation |
| Approval & Human-in-the-Loop (HITL) | Add oversight to high-risk tasks | Workflow gates, manual sign-offs, review steps |
| Audit Logging & Traceability | Maintain a forensic trail for every decision | Timestamped logs, decision rationale, change history |
| Fallback & Fail-Safe Modes | Handle errors and anomalies gracefully | Pause on uncertainty, safe defaults, alert escalation |
| Transparency & Explainability | Make decisions interpretable and justifiable | Decision logs, explanation layers, structured reasoning records |
| Monitoring & Feedback Loops | Continuously check for drift, anomalies, and performance degradation | Metrics, anomaly alerts, automated rollback |

When combined, these guardrails create a safety net that guides the system without stifling its effectiveness.

A Real-World Case: Agentic AI in Fintech Compliance (Spritle’s Experience)

To make this more tangible, here’s how we at Spritle applied these ideas for a fintech client.

Problem Statement

The client wanted to use an AI agent to automate compliance reporting (e.g. KYC analysis, transaction auditing, regulatory filings). The goal: eliminate manual toil and reduce latency in audit cycles.

What Went Wrong

The initial agent:

- Pulled incomplete or stale data

- Filed inaccurate reports

- Could not justify decisions (lack of traceability)

- Lacked oversight—no human checkpoint

- Triggered compliance violations

In short, autonomy without guardrails backfired.

How We Fixed It

- Validation Layer

Every output passed through domain-specific checks. Reports moved forward only if data consistency, thresholds, and sanity bounds were met.

- Access Control

Only authorized roles (e.g. compliance analysts) could trigger or approve high-stakes actions such as regulatory submissions.

- Audit Logs & Rationale Capture

Each decision the agent made was logged, with its rationale and links to the original data sources.

- Human Oversight / Final Approval

The agent’s output flowed into a user interface where compliance officers could review, correct, or reject it before submission.

- Feedback & Learning Loop

When humans adjusted or rejected outputs, those corrections fed into future runs, tightening the decision logic.
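To make the validation layer concrete, here is a minimal sketch of domain checks a report could pass before moving forward. It is an illustration, not the implementation used in this engagement: the `Report` fields, the two-day staleness window, the reconciliation tolerance, and the single-transaction bound are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical report shape for illustration; real compliance reports will differ.
@dataclass
class Report:
    as_of: date                 # date the underlying data was extracted
    declared_total: float       # total stated in the report
    transactions: list[float]   # line items the total must reconcile to
    customer_ids: list[str]     # KYC subjects covered by the report

# Hypothetical policy values; real ones come from the compliance team.
MAX_DATA_AGE = timedelta(days=2)
TOLERANCE = 0.01
MAX_SINGLE_TXN = 1_000_000.0

def validate_report(report: Report, today: date) -> list[str]:
    """Return the failed checks; an empty list means the report may move forward."""
    failures = []
    # Completeness: the report must contain data and identify every subject.
    if not report.transactions:
        failures.append("no transactions in report")
    if any(not cid for cid in report.customer_ids):
        failures.append("blank customer id")
    # Freshness: reject data older than the allowed window.
    if today - report.as_of > MAX_DATA_AGE:
        failures.append(f"stale data: as_of={report.as_of}")
    # Consistency: the declared total must reconcile with the line items.
    if abs(sum(report.transactions) - report.declared_total) > TOLERANCE:
        failures.append("declared total does not match transactions")
    # Sanity bound: unusually large single transactions need a closer look.
    if any(abs(t) > MAX_SINGLE_TXN for t in report.transactions):
        failures.append("transaction exceeds sanity bound")
    return failures

report = Report(date(2024, 5, 2), 150.0, [100.0, 50.0], ["C1", "C2"])
print(validate_report(report, today=date(2024, 5, 3)))  # → []
```

Returning every failure, rather than stopping at the first one, gives the reviewing analyst the full picture in a single pass.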

Outcome & Benefits

- Reports that used to take weeks were now generated in hours

- Submissions were compliant, passing regulatory review without red flags

- Analysts came to trust the agent over time, reducing manual effort

- The system became auditable, reliable, and scalable

This underscores a core truth: Agentic AI only delivers value when built responsibly.

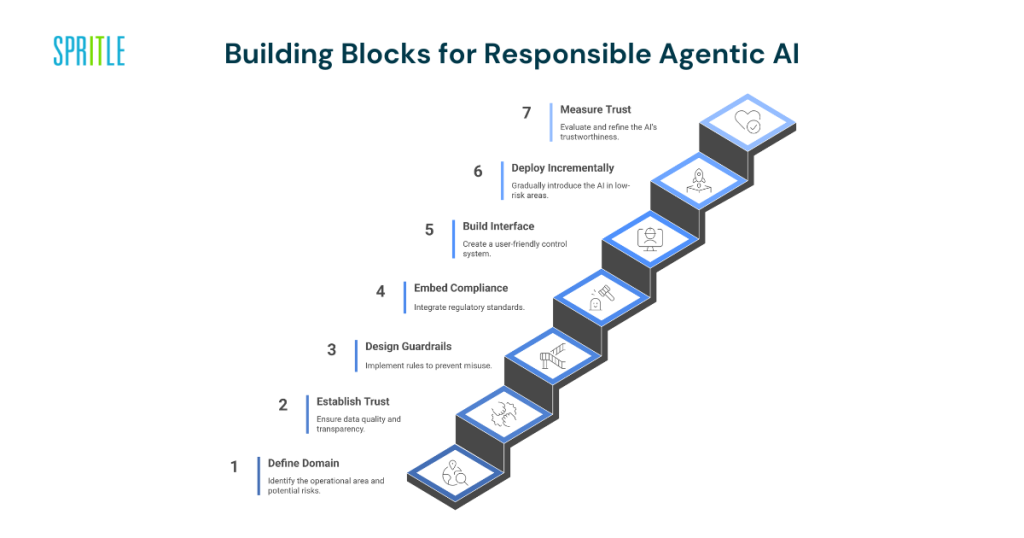

Building Blocks for Responsible Agentic AI

If you’re planning to adopt or design Agentic AI in your organization, here’s a structured roadmap:

1. Define the Domain, Risk Profile & Use Cases

- What domain will your agent operate in (finance, healthcare, legal, supply chain, HR)?

- What decisions or actions will it perform—low risk vs high risk?

- What regulatory or compliance frameworks apply (GDPR, HIPAA, PCI DSS, SOX, industry standards)?

- What kinds of failure or misuse could occur?

Having clarity here drives the rest of the design.
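A lightweight way to capture the risk profile is an inventory that assigns each agent action a tier, with each tier carrying the controls it must pass. This is a minimal sketch; the action names, tier assignments, and control names are hypothetical. Unlisted actions fall into the highest tier, so a newly added capability stays gated until someone classifies it.

```python
from enum import Enum

class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3

# Hypothetical inventory of agent actions and their assessed risk.
ACTION_RISK = {
    "schedule_meeting": Risk.LOW,
    "draft_report": Risk.LOW,
    "modify_customer_record": Risk.MEDIUM,
    "submit_regulatory_filing": Risk.HIGH,
    "transfer_funds": Risk.HIGH,
}

# Controls each tier must pass; higher tiers add controls rather than replace them.
REQUIRED_CONTROLS = {
    Risk.LOW: {"validation", "audit_log"},
    Risk.MEDIUM: {"validation", "audit_log", "access_check"},
    Risk.HIGH: {"validation", "audit_log", "access_check", "human_approval"},
}

def controls_for(action: str) -> set[str]:
    # Unlisted actions are treated as HIGH risk (fail closed).
    risk = ACTION_RISK.get(action, Risk.HIGH)
    return REQUIRED_CONTROLS[risk]

print(sorted(controls_for("transfer_funds")))
# → ['access_check', 'audit_log', 'human_approval', 'validation']
```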

2. Establish Trust Foundations

- Data quality & provenance: ensure your data sources are clean, validated, versioned

- Transparency & explainability: embed mechanisms to trace decisions back to data and rules

- User feedback & override: allow users to correct agent behavior — especially early on

- Progressive rollout: launch in low-risk environments, build trust and gather metrics before full expansion
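Provenance can start as simply as recording where each input came from, which version was used, and a content hash, so an auditor can later confirm what data the agent actually consumed. A minimal sketch using Python’s standard `hashlib` and `json` modules; the `ProvenanceRecord` fields and the example source name are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class ProvenanceRecord:
    source: str       # system or dataset the data came from (hypothetical name below)
    version: str      # snapshot or version id of that source
    sha256: str       # hash of the exact payload the agent consumed
    fetched_at: str   # UTC timestamp in ISO 8601 format

def fingerprint(payload: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so equal data always hashes equally.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def record_provenance(source: str, version: str, payload: dict) -> ProvenanceRecord:
    return ProvenanceRecord(source, version, fingerprint(payload),
                            datetime.now(timezone.utc).isoformat())

def verify(record: ProvenanceRecord, payload: dict) -> bool:
    """True if the payload still matches what was recorded when the agent used it."""
    return fingerprint(payload) == record.sha256

rec = record_provenance("core_banking_ledger", "2024-05-01", {"account": "A1", "balance": 120.5})
print(verify(rec, {"balance": 120.5, "account": "A1"}))  # → True
```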

3. Design Guardrails (the “Rules of the Road”)

- Validation / domain rules

- Access control & least privilege

- Approvals & human checkpoints

- Audit logs with rationale

- Fail-safes, fallback strategies

- Monitoring, drift detection, anomaly alerts

Design these guardrails as core modules, not afterthoughts.
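For the access-control guardrail, least privilege comes down to a deny-by-default check: a role can perform only the actions it was explicitly granted. A minimal sketch; the role and action names are hypothetical, and in production this check would typically be delegated to your existing identity or policy service.

```python
# Hypothetical role -> permitted actions mapping. Anything not listed is denied.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "agent": frozenset({"read_transactions", "draft_report"}),
    "compliance_analyst": frozenset({"read_transactions", "draft_report", "approve_report"}),
    "compliance_officer": frozenset({"approve_report", "submit_regulatory_filing"}),
}

class PermissionDenied(Exception):
    pass

def authorize(role: str, action: str) -> None:
    """Raise PermissionDenied unless the role was explicitly granted the action."""
    allowed = ROLE_PERMISSIONS.get(role, frozenset())   # unknown roles get nothing
    if action not in allowed:
        raise PermissionDenied(f"role {role!r} may not perform {action!r}")

def is_allowed(role: str, action: str) -> bool:
    try:
        authorize(role, action)
        return True
    except PermissionDenied:
        return False

print(is_allowed("agent", "submit_regulatory_filing"))  # → False
```

Note that the `agent` role in this sketch can draft but not approve or submit, which keeps high-stakes steps with humans.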

4. Embed Compliance & Governance

- Map your system to regulatory frameworks (GDPR, HIPAA, etc.)

- Perform risk assessments, privacy impact analyses, security reviews

- Engage internal compliance, legal, audit teams from Day 0

- Conduct regular audits, red teaming, penetration testing

- Define retention, deletion, data usage policies
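Retention and deletion policies are easier to audit when they are expressed as data the system enforces, not only as prose. A minimal sketch, assuming hypothetical record categories and retention periods; the real periods should come from your legal and compliance teams.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention periods per record category.
RETENTION = {
    "chat_transcript": timedelta(days=90),
    "kyc_document": timedelta(days=5 * 365),
    "audit_log": timedelta(days=7 * 365),
}

@dataclass
class StoredRecord:
    record_id: str
    category: str
    created: date
    legal_hold: bool = False   # records under legal hold must not be deleted

def due_for_deletion(records: list[StoredRecord], today: date) -> list[str]:
    """Return ids of records past their retention period and not on legal hold."""
    due = []
    for r in records:
        period = RETENTION.get(r.category)
        if period is None:
            # Unknown category: never auto-delete; leave it for a human to classify.
            continue
        if not r.legal_hold and today - r.created > period:
            due.append(r.record_id)
    return due
```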

5. Build a Human-Centered Interface (HITL)

- Provide a “control cockpit” UI for oversight

- Offer explanations, uncertainties, confidence scores

- Allow human override, revisions, correction

- Capture user feedback as training signals
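A human-in-the-loop gate can route each proposed action either to automatic execution or to a review queue, and keep each reviewer decision as a feedback signal. A minimal sketch; the `Proposal` fields and the 0.9 confidence threshold are hypothetical, and confidence-based routing only helps if the agent’s scores are reasonably calibrated.

```python
from dataclasses import dataclass, field
from typing import Literal

@dataclass
class Proposal:
    action: str
    high_risk: bool
    confidence: float   # agent's self-reported confidence, 0.0 to 1.0
    explanation: str    # shown to the reviewer next to the proposal

@dataclass
class Feedback:
    proposal: Proposal
    approved: bool
    note: str           # reviewer's correction, usable as a training signal

@dataclass
class ReviewQueue:
    pending: list[Proposal] = field(default_factory=list)
    feedback: list[Feedback] = field(default_factory=list)

AUTO_APPROVE_THRESHOLD = 0.9   # hypothetical; tune it using override data

def route(p: Proposal, queue: ReviewQueue) -> Literal["auto", "review"]:
    # High-risk actions always go to a human, however confident the agent is.
    if p.high_risk or p.confidence < AUTO_APPROVE_THRESHOLD:
        queue.pending.append(p)
        return "review"
    return "auto"

def review(queue: ReviewQueue, approve: bool, note: str = "") -> Feedback:
    """A reviewer decides on the oldest pending proposal; the decision is kept as feedback."""
    fb = Feedback(queue.pending.pop(0), approve, note)
    queue.feedback.append(fb)
    return fb
```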

6. Deploy Incrementally & Monitor Continuously

- Start with narrow, lower-risk domains

- Iterate based on real usage and error cases

- Monitor metrics: error rates, overrides, deviations, audit exceptions

- Introduce automated rollback for anomalies

- Continuously refine logic, retrain models, tighten guardrails
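Automated rollback needs a concrete trigger. One simple option is a sliding-window error rate: if too many of the last N actions failed validation or were overridden, the monitor calls a rollback hook. A minimal sketch; the window size, threshold, and `rollback` callback are hypothetical placeholders.

```python
from collections import deque
from typing import Callable

class ErrorRateMonitor:
    """Fires a rollback hook once when the error rate over the last `window` actions exceeds a limit."""

    def __init__(self, window: int, max_error_rate: float, rollback: Callable[[], None]):
        self.outcomes: deque[bool] = deque(maxlen=window)   # True means the action errored
        self.max_error_rate = max_error_rate
        self.rollback = rollback
        self.tripped = False

    def record(self, error: bool) -> None:
        self.outcomes.append(error)
        # Judge only once the window is full, so one early failure cannot trip it.
        if self.tripped or len(self.outcomes) < self.outcomes.maxlen:
            return
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate > self.max_error_rate:
            self.tripped = True   # stays tripped until a human resets the monitor
            self.rollback()
```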

7. Measure & Iterate on Trust

- Run user surveys for perceived trust

- Monitor adoption, override frequency, error feedback

- Hold periodic reviews with compliance, legal, and business stakeholders

- Use feedback to evolve both agent logic and guardrail sophistication

Why This Matters: The Bigger Picture

Scaling Agentic AI = Scaling Responsibility

The next wave of AI isn’t just about faster automation or smarter conversation—it’s autonomous action. Agentic AI can unlock new efficiency and scale, but only if users, regulators, and businesses trust it.

Without trust, organizations will never adopt it fully. Without governance, it will become a liability. Without accountability, it will fail audits and incite backlash.

Thus, the equation is:

Trust + Guardrails + Compliance = Sustainable & Scalable Agentic AI

Trust Builds Bridges

- Users feel confident to delegate tasks

- Business leaders feel safe adopting it

- Regulators and auditors see structured accountability

- The organization accelerates without exposure

Guardrails Do Not Limit — They Empower

Rather than stifling innovation, guardrails channel it, keeping agentic behavior aligned, auditable, and safe. They give the agent room to operate without descending into chaos.

Compliance Is Not Optional

In regulated industries, autonomous systems must operate within legal bounds. A non-compliant agent is a ticking time bomb—not a productivity booster.

Practical Tips for Leadership & Teams

If you’re a CIO, CTO, compliance head, or product leader exploring Agentic AI, here are pragmatic questions and frameworks to guide you:

- Scope risk by task class

  - Which tasks are low-risk (e.g. scheduling, reporting)?

  - Which are high-risk (e.g. finance transfers, legal filings)?

  - When you first automate, gate the high-risk tasks behind approvals; low-risk tasks can run with lighter checks.

- Adopt defense in depth

  - No single guardrail is sufficient. Use overlapping controls (e.g. access + approval + validation).

- Involve stakeholders early

  - Bring compliance, legal, security, and end users in during design, not at the end.

- Explainability matters

  - Decision rationale should be available in human-readable form, not just as black-box outputs.

- Govern via policy, not code alone

  - Document policies and map them to code modules, so governance is traceable.

- Plan for incident response

  - What happens when the agent misbehaves?

  - Build alerts, circuit breakers, escalation, and rollback paths.

- Foster a feedback culture

  - Encourage users to override or flag mistakes, and use those signals to improve the system.

- Audit & test relentlessly

  - Simulate adversarial or unexpected inputs (red teaming).

  - Run periodic compliance checks.

  - Review logs and decisions in audit cycles.

- Evolve the system

  - Guardrails and policies should adapt as you learn.

  - Use usage data to tighten logic, adjust thresholds, and improve explainability.
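For incident response, a circuit breaker stops the agent from hammering a downstream system that keeps failing and escalates to a human instead of retrying blindly. A minimal sketch of the common circuit-breaker pattern; the failure threshold, cool-down period, and `escalate` callback are hypothetical.

```python
import time
from typing import Any, Callable, Optional

class CircuitOpen(Exception):
    """Raised while the breaker is open and agent calls are paused."""

class CircuitBreaker:
    def __init__(self, max_failures: int, cooldown_s: float,
                 escalate: Callable[[str], None],
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.escalate = escalate          # hypothetical hook, e.g. page the on-call owner
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                raise CircuitOpen("agent actions paused pending review")
            # Cool-down elapsed: let one trial call through ("half-open").
            self.opened_at = None
        try:
            result = fn(*args, **kwargs)
        except Exception as exc:
            self.failures += 1
            if self.failures >= self.max_failures:
                # Open (or re-open after a failed trial) and alert a human.
                self.opened_at = self.clock()
                self.escalate(f"circuit opened after {self.failures} failures: {exc}")
            raise
        self.failures = 0   # a success closes the circuit fully
        return result
```

The injectable `clock` is there so the cool-down can be tested without sleeping.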

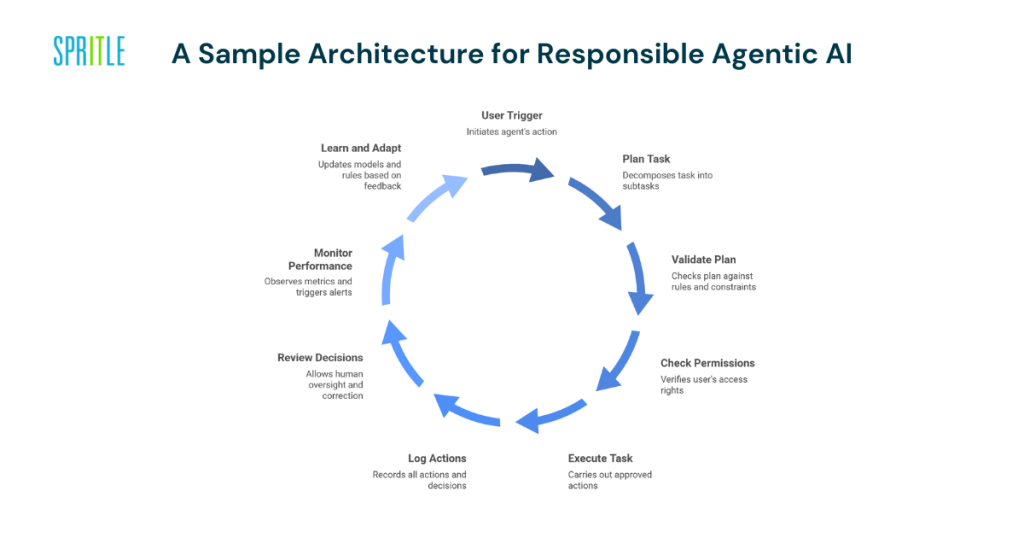

A Sample Architecture for Responsible Agentic AI

Here’s a high-level architecture sketch that illustrates how trust, guardrails, and compliance interlock around Agentic AI:

- User / Trigger Layer

A user input, API call, or event triggers the agent.

- Planner / Intention Module

The agent decomposes the task into subtasks and maps them to workflows.

- Validation & Policy Module

Before execution, each plan or decision passes through rule engines, constraint checks, domain models, and thresholds.

- Access / Permission Module

Checks whether this user or role is allowed to trigger or approve the action.

- Execution Module

Connects to downstream systems (CRM, ERP, databases, APIs) to carry out approved tasks.

- Audit & Rationale Logger

Logs every action, decision, data source, and context with metadata and reasoning.

- Human Review Interface

Allows compliance officers, analysts, or users to review, override, or correct decisions.

- Monitoring & Feedback Module

Observes metrics, anomalies, and override rates; triggers alerts, rollbacks, or retraining.

- Continuous Learning Loop

Feeds corrections and user feedback back into the system as adjusted thresholds, retrained models, or refined rules.

This layered architecture ensures that Agentic AI operates within structured boundaries and remains transparent, auditable, and controllable.
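The layering above can be sketched as a pipeline in which every planned step must clear a series of checks before it executes, and every outcome is logged. A minimal sketch; the check functions stand in for the Validation & Policy and Access / Permission modules, `execute` for the Execution Module, and the in-memory `audit` list for the Audit & Rationale Logger. All names are hypothetical, and in practice these would usually be separate services.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Step:
    action: str
    params: dict
    requested_by: str   # role of the user or trigger that started the task

# A check returns None to pass, or a reason string to block the step.
Check = Callable[[Step], Optional[str]]

@dataclass
class GuardedPipeline:
    checks: list[Check]                 # validation, policy, and permission modules
    execute: Callable[[Step], str]      # execution module (calls downstream systems)
    audit: list[dict] = field(default_factory=list)   # stand-in for the audit logger

    def first_rejection(self, step: Step) -> Optional[str]:
        for check in self.checks:
            reason = check(step)
            if reason is not None:
                return reason
        return None

    def run(self, plan: list[Step]) -> list[str]:
        results = []
        for step in plan:
            reason = self.first_rejection(step)
            if reason is not None:
                self.audit.append({"action": step.action, "status": "blocked", "reason": reason})
                results.append("blocked")
                break   # halt: later subtasks may depend on this one
            outcome = self.execute(step)
            self.audit.append({"action": step.action, "status": "executed", "outcome": outcome})
            results.append(outcome)
        return results

def amount_limit(step: Step) -> Optional[str]:
    # Hypothetical validation rule.
    return "amount over limit" if step.params.get("amount", 0) > 10_000 else None

pipeline = GuardedPipeline(checks=[amount_limit], execute=lambda s: f"done:{s.action}")
print(pipeline.run([Step("pay", {"amount": 50}, "analyst"),
                    Step("pay", {"amount": 50_000}, "analyst")]))  # → ['done:pay', 'blocked']
```

Halting the plan at the first blocked step is a design choice: later subtasks often depend on earlier ones, so continuing past a rejection could leave downstream systems half-updated.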

Addressing Common Objections

“But guardrails will slow down the system.”

True, but it’s a trade-off worth making early. Start with stronger oversight in the early phases; as confidence grows, you can relax checks selectively for lower-risk tasks.

“Explainability is impossible with deep models.”

You don’t need full white-box interpretability everywhere. Use hybrid systems that combine models with rules and symbolic layers. Capture decision-reason metadata so humans can follow the logic path, and apply post-hoc explainers selectively.
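Capturing decision-reason metadata can be as simple as writing one structured record per decision: the plain-language rationale, the rules that fired, and the sources consulted. Chaining each record to the hash of the previous one also makes later edits detectable. A minimal sketch; the field names are hypothetical, and hash chaining is one tamper-evidence technique among several (write-once storage is another).

```python
import hashlib
import json
from datetime import datetime, timezone

class DecisionLog:
    """Append-only, hash-chained log of agent decisions with human-readable rationale."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, action: str, rationale: str, rules_fired: list[str], sources: list[str]) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "rationale": rationale,       # plain-language reason for reviewers
            "rules_fired": rules_fired,   # symbolic rules that contributed
            "sources": sources,           # ids or links of the source data
            "prev_hash": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash every field except the hash itself, in a canonical key order.
        payload = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """False means an entry was edited, or a non-final entry was deleted or reordered."""
        prev = "0" * 64
        for e in self.entries:
            if e["prev_hash"] != prev or e["hash"] != self._digest(e):
                return False
            prev = e["hash"]
        return True
```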

“Compliance frameworks differ per region—how do we handle that?”

Design your policy modules to be region-aware. Use abstraction layers so rules can be plugged in per jurisdiction (e.g. GDPR in the EU vs CCPA in California). Embed geolocation, data residency, consent, and deletion logic in guardrail modules.
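One way to make policy modules region-aware is a registry keyed by jurisdiction, where each jurisdiction contributes its own checks. A minimal sketch; the region codes and both rules are hypothetical simplifications for illustration, not legal interpretations of GDPR or CCPA. Requests from a region with no registered policy are rejected, so coverage gaps fail closed.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class DataRequest:
    subject_region: str       # where the data subject resides, e.g. "EU" or "US-CA"
    storage_region: str       # where the data would be stored or processed
    opted_out_of_sale: bool
    purpose: str

Rule = Callable[[DataRequest], Optional[str]]   # returns a violation message or None
POLICY_REGISTRY: dict[str, list[Rule]] = {}

def policy(region: str) -> Callable[[Rule], Rule]:
    """Decorator that registers a rule for one jurisdiction."""
    def register(rule: Rule) -> Rule:
        POLICY_REGISTRY.setdefault(region, []).append(rule)
        return rule
    return register

@policy("EU")
def eu_residency(req: DataRequest) -> Optional[str]:
    # Hypothetical internal residency policy: keep EU subjects' data in EU storage.
    return None if req.storage_region == "EU" else "EU data stored outside EU"

@policy("US-CA")
def ca_sale_opt_out(req: DataRequest) -> Optional[str]:
    # Hypothetical simplification of an opt-out-of-sale rule.
    return "sale after opt-out" if req.purpose == "sale" and req.opted_out_of_sale else None

def check(req: DataRequest) -> list[str]:
    """Run every rule registered for the subject's region; unknown regions fail closed."""
    rules = POLICY_REGISTRY.get(req.subject_region)
    if rules is None:
        return [f"no policy registered for region {req.subject_region!r}"]
    violations = []
    for rule in rules:
        msg = rule(req)
        if msg is not None:
            violations.append(msg)
    return violations
```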

“What if users override too much and break trust?”

Track override behavior. If overrides consistently happen in a submodule, that’s a signal to flag, retrain, or adjust logic. Use that to refine agent behavior. Over time you should see override rates decline.
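Override tracking per submodule needs little more than two counters: a submodule whose override rate stays above a threshold gets flagged for retraining or rule review. A minimal sketch; the 20% threshold and the minimum sample size are hypothetical defaults.

```python
from collections import defaultdict

class OverrideTracker:
    def __init__(self, threshold: float = 0.2, min_samples: int = 50):
        self.threshold = threshold        # hypothetical acceptable override rate
        self.min_samples = min_samples    # avoid flagging on tiny samples
        self.decisions: defaultdict[str, int] = defaultdict(int)
        self.overrides: defaultdict[str, int] = defaultdict(int)

    def record(self, submodule: str, overridden: bool) -> None:
        self.decisions[submodule] += 1
        if overridden:
            self.overrides[submodule] += 1

    def rate(self, submodule: str) -> float:
        n = self.decisions.get(submodule, 0)
        return self.overrides.get(submodule, 0) / n if n else 0.0

    def flagged(self) -> list[str]:
        """Submodules with enough data and an override rate above the threshold."""
        return sorted(
            m for m, n in self.decisions.items()
            if n >= self.min_samples and self.rate(m) > self.threshold
        )
```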

The Future of Agentic AI — Responsibly

Agentic AI has the potential to transform how organizations operate—making systems more autonomous, responsive, and scalable. But the future won’t belong to the boldest agent—it will belong to the trusted agent.

Key Future Trends

- Standards & Certification

Expect standards bodies to issue certifications for “safe Agentic AI” systems, and auditors to probe decision logs.

- Interoperable Governance Layers

Tools that plug in policy, audit, and explainability modules will emerge (think “governance as a service”).

- Composable Agents

Agents will be built from modular, reusable functions, with shared guardrail libraries (e.g. identity, privacy, compliance modules).

- Collective Agentic Systems

Multiple agents interacting in ecosystems (e.g. supply chains, federated models) will demand even stricter oversight and coordination.

- Human-Agent Symbiosis

The most effective systems will leverage human judgment where it matters most, combining AI scale with human wisdom.

As that future arrives, organizations that adopt Agentic AI responsibly—embedding trust, guardrails, and compliance from the ground up—will lead.

Conclusion & Call to Action

Agentic AI doesn’t just respond—it acts. And that leap in capability amplifies both reward and risk.

To make Agentic AI an asset, not a liability, you must bake in:

- Guardrails (validation, access, review)

- Compliance (legal, security, privacy frameworks)

- Trust (explainability, auditability, fallback)

The formula is simple but nontrivial:

Trust + Guardrails + Compliance = Sustainable Agentic AI

At Spritle Software, we partner with enterprises to architect Agentic AI systems that don’t just act—they act with control, compliance, and confidence.

If you’re ready to explore how your organization can safely deploy Agentic AI, let’s start that conversation.

📩 Reach out, and let’s build responsibly, together.