Imagine you’re leading an AI initiative at a mid-sized healthcare startup.

Your team has prototyped a patient-facing chatbot that helps summarize diagnoses and explain treatment options using a large language model. The demo went well — the investors are thrilled, and leadership wants it in production. But one week into “real-world testing,” you’re staring at a pile of misinterpreted inputs, privacy concerns, and angry clinicians saying the bot oversimplifies medical nuance.

Sound familiar?

Welcome to the wild world of fine-tuning foundation models — where the jump from demo to deployment is anything but trivial.

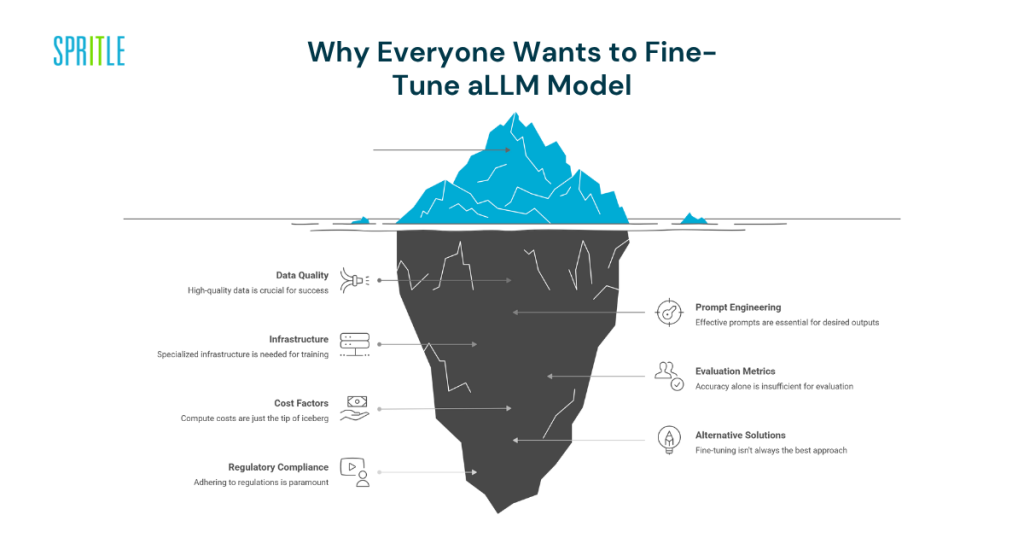

Why Everyone Wants to Fine-Tune (and Why It’s Risky)

With GPT, LLaMA, Claude, and open-source LLMs exploding in capability, it’s tempting to fine-tune one to fit your use case. It sounds logical: why rely on general-purpose outputs when you can tailor the model to your domain?

But here’s the uncomfortable truth: most teams underestimate what it really takes to fine-tune well — and the cost of getting it wrong.

Fine-tuning is no longer just about adding a few custom examples and hitting “train.” It’s an engineering-heavy, decision-packed journey with lots of sharp edges. And the real work starts well before you ever fire up a GPU.

1. Data Isn’t Just Fuel — It’s the Whole Engine

You can’t fine-tune a model well without task-specific, domain-relevant, and high-quality data. Period.

The Problem:

- Public datasets often don’t match your domain (e.g., legal, medical, fintech).

- Internal data is messy, inconsistent, or lacks good labels.

- You don’t just need input-output pairs — you need context, intent, and edge cases.

Real Example:

A fintech company tried fine-tuning an open-source model on 5K customer support tickets. The model started recommending actions that violated compliance rules — because the training data didn’t include regulatory edge cases or escalation paths.

Solution:

- Start with data audits: where is your data coming from, and what’s missing?

- Include failure examples, edge cases, and user feedback loops.

- If needed, build a manual annotation pipeline with SMEs.

Remember: garbage in, hallucinations out.

2. Prompt Engineering Isn’t a Silver Bullet

Before diving into fine-tuning, many teams try prompt engineering — and it works up to a point. You can get decent results by crafting clever instructions or using few-shot examples.

But there’s a ceiling.

Prompt engineering doesn’t:

- Fix hallucinations.

- Adapt over time.

- Handle task generalization across thousands of real-world variations.

When Fine-Tuning Actually Makes Sense:

- You need consistent tone, structure, and accuracy.

- You’re automating long-form outputs (e.g., medical summaries, legal briefs).

- Prompt tuning/RAG isn’t cutting it for UX or performance needs.

Fine-tuning helps move from a “language model” to a task-specific agent.

3. Infrastructure: This Isn’t Your Typical Dev Stack

Training even a 7B parameter model (e.g., Mistral, LLaMA-2) isn’t something you casually run on your MacBook.

You’ll Need:

- High-performance GPUs (A100s or H100s for serious jobs)

- Data pipelines for preprocessing and versioning

- MLOps tools for checkpointing, rollback, and model registry

- Monitoring dashboards for drift, latency, and behavior tracking

And don’t forget: fine-tuning isn’t a one-time job. You’ll likely retrain as new data, features, or compliance rules emerge.

4. Evaluation: Beyond Accuracy

How do you know your fine-tuned model is “better”?

Accuracy alone won’t cut it — especially in high-stakes domains.

What You Need:

- Custom benchmarks based on real-world inputs

- Manual review processes with human raters (e.g., “Does this summary preserve intent?”)

- Evaluation on:

- Factual correctness

- Tone

- Edge case handling

- Hallucination rate

- Toxicity / bias flags

- Factual correctness

Think like QA meets AI.

Pro Tip:

Don’t just use win rates vs GPT-4. Build your own gold standard dataset and review against it regularly.

5. Cost: More Than Just Compute

Fine-tuning costs go far beyond GPU time.

Hidden Costs:

- Data labeling ($$$ if you need domain experts)

- MLOps engineering (pipelines, monitoring, rollback infra)

- Compliance reviews

- Post-deployment debugging (AI bugs are opaque)

Even open-source models come with infra costs. Just loading and serving a 13B parameter model in production at low latency is its own beast.

So don’t ask “What’s the cost of fine-tuning?” — ask “What’s the total cost of ownership over 12 months?”

6. When Fine-Tuning is the Wrong Tool

Here’s a hard truth: most use cases don’t need full model fine-tuning.

Better Options May Include:

- Adapters (LoRA, QLoRA): lightweight, cheaper

- RAG (Retrieval-Augmented Generation): keeps your data out of model weights

- Custom system prompts + function calling

Rule of Thumb:

Fine-tune only when:

- Your use case demands deep task-specific behavior.

- You can’t solve it with smarter prompt + context design.

7. Regulatory & Safety: Not Optional

If you’re in healthcare, legal, finance, or education — fine-tuning isn’t just technical. It’s regulatory.

Common Gaps:

- No audit trail for model outputs

- Training on PII without consent

- Outputs violating compliance policies

Must-Haves:

- Red teaming and adversarial testing

- Role-based access control

- Model versioning & logging

- Documentation for AI Act, GDPR, or HIPAA depending on region

And always keep a human in the loop when consequences matter.

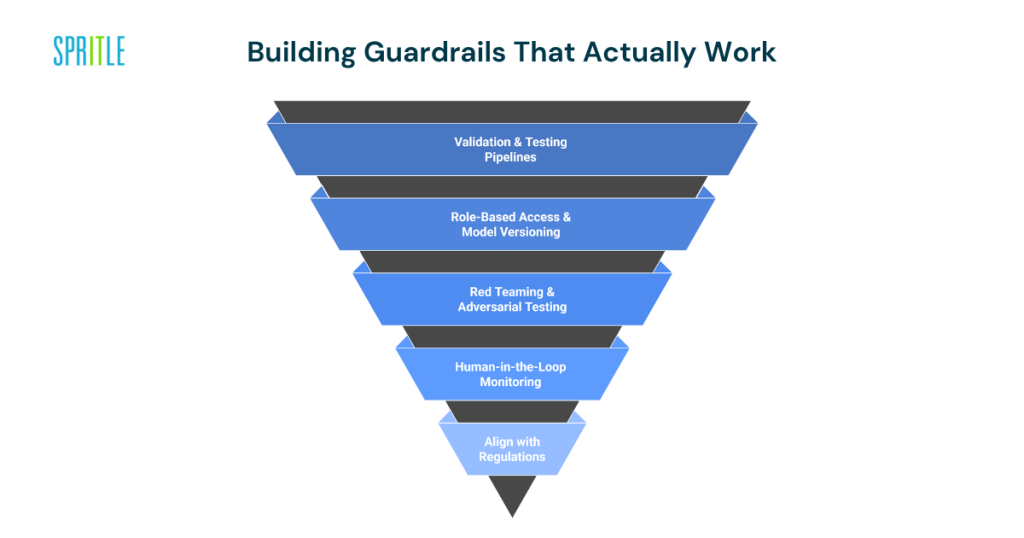

🛡️ Building Guardrails That Actually Work

Fine-tuning a foundation model without guardrails is like deploying code without testing — it’s a recipe for failure.

When LLMs are exposed to real users and real data, even the most accurate model can go off-course. Guardrails aren’t just nice to have — they’re essential to mitigate risk, especially in regulated domains like healthcare, finance, or legal.

Here’s how to build real-world guardrails into your fine-tuning pipeline:

✅ Validation & Testing Pipelines

Set up automated tests for:

- Factual accuracy

- Toxicity and bias detection

- Edge case behavior

- Hallucination triggers

Treat this like a CI/CD pipeline for AI: run tests on every new fine-tune checkpoint before pushing to production.

✅ Role-Based Access & Model Versioning

Only authorized users should be able to access, edit, or deploy tuned models. Maintain clear version history, audit logs, and rollback paths in case something breaks or violates compliance.

✅ Red Teaming & Adversarial Testing

Intentionally stress-test your model with prompts designed to trick or exploit it. Involve domain experts to craft adversarial examples (e.g., ambiguous legal questions, misleading health terms).

✅ Human-in-the-Loop Monitoring

No guardrail system is complete without human oversight. Build dashboards for real-time feedback, alerts for high-risk responses, and escalation paths for manual review.

✅ Align with Regulations

Guardrails are how you stay compliant. Build around frameworks like:

- HIPAA (for healthcare)

- GDPR (for EU users)

- SOC2 / ISO (for enterprise systems)

Don’t just ship faster. Ship safely, and with control.

Metaphor: It’s Like Hiring a Junior Developer

Fine-tuning a model is like onboarding a junior developer:

- You need to give it good documentation (training data)

- Set up guardrails (validation, tests, approvals)

- Monitor what it produces

- Retrain or course-correct when it misunderstands something

You wouldn’t let a new hire ship to prod without review — don’t let your fine-tuned model do it either.

Case Study: Healthcare Startup’s AI Assistant

A Series a healthtech startup wanted to launch an LLM-powered assistant to help patients understand discharge summaries.

Their Plan:

- Fine-tune a small open-source model on 10K anonymized discharge summaries.

- Deploy it in their patient app for real-time explanations.

Their Problems:

- Data inconsistencies: Some notes were written in shorthand, others in full prose.

- No ground truth: It wasn’t clear what a “correct” summary looked like.

- Evaluation was fuzzy: Medical staff disagreed on ideal outputs.

- After launch: The model confused medication dosage 3 times. Legal flagged it.

Their Fix:

- Switched to RAG with vector search + templated summaries.

- Introduced human review for high-risk content.

- Used LoRA fine-tuning for tone/style, not facts.

Result: Safer, faster, and easier to iterate on — with doctors still in control.

5 Key Questions Before You Fine-Tune

- What exact behavior do we want to teach the model?

- Can we get high-quality examples for that behavior?

- Do we have the infra to train, serve, and monitor this model?

- How will we evaluate success — beyond accuracy?

- Are we better off using prompt tuning or RAG for this case?

If you don’t have strong answers to all 5, pause the fine-tuning plan.

Looking Ahead: What Will Change in 2025+

Fine-tuning isn’t going away — but how teams do it will evolve.

Expect to See:

- More modular tuning (e.g., fine-tuning tone, not tasks)

- Rise of domain-specific model hubs (e.g., FinLLM, MedLLM)

- Tight RAG + lightweight tuning combos

- End-to-end AI DevOps pipelines (automated eval, rollback, A/B testing)

And most importantly, more non-AI teams learning how to supervise AI workflows responsibly.

TL;DR: Fine-Tuning Isn’t Hard. Doing It Right Is.

It’s easy to spin up a Colab and start training. It’s much harder to:

- Define the right task

- Source trustworthy data

- Build reliable infra

- Evaluate meaningfully

- Stay compliant

- Avoid model drift

- Justify the cost

But when you get it right? You don’t just have a smarter model — you’ve built a durable capability. One that lets you launch new features faster, adapt to c customer needs, and keep human creativity at the center of AI workflows.

Because the goal isn’t just to ship a fine-tuned model — it’s to make AI work in the messy, high-stakes real world.