How autonomous AI is reshaping cybersecurity — and why it’s not about replacing humans, but giving them the upper hand.

Hey, I’m Sri Kumar – a DevOps Engineer.

And if you work in cybersecurity or DevOps, especially in AI-driven teams, you’ll want to hear this.

It’s 3:08 PM on a regular weekday.

A new deployment just went live: business as usual for Siva KB, Director of AI at Spritle Software, a fast-scaling AI SaaS company. His team pushes new code constantly. Product velocity is high. Users are delighted.

A frontend developer is rushing to ship a new payment dashboard feature. She needs a date manipulation library and quickly adds moment.js to her project.

But here’s what she doesn’t know: that specific version of moment.js has a ReDoS vulnerability (CVE-2022-31129), where a maliciously crafted date string can tie up the CPU and stall her application.

And yet… no alert storm.

No Slack meltdown.

No incident postmortem panic.

Why?

Because while the team grabbed coffee, an AI security agent:

- Scanned the package.json diff

- Cross-referenced moment@2.24.0 against the National Vulnerability Database

- Identified CVE-2022-31129, with a CVSS score of 7.5 (High)

- Opened a pull request upgrading moment.js to 2.29.4 or later (the patched release), with migration notes

Before anyone noticed. This isn’t a futuristic fantasy. It’s already happening.

And it’s quietly reshaping the way modern security teams work.
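Under the hood, the agent's first three steps amount to a version-range check against advisory data. Here is a minimal sketch in Python, assuming a hard-coded advisory table in place of a live NVD query; the function names and table layout are hypothetical. Its one entry records that CVE-2022-31129 affects moment.js from 2.18.0 up to, but not including, 2.29.4, and the version parser only understands plain `MAJOR.MINOR.PATCH` strings.

```python
import json
from typing import NamedTuple

Version = tuple[int, int, int]

class Advisory(NamedTuple):
    package: str
    cve: str
    cvss: float
    introduced: Version  # first affected version (inclusive)
    fixed: Version       # first patched version (exclusive upper bound)

# Hypothetical local advisory table standing in for an NVD lookup.
ADVISORIES = [
    Advisory("moment", "CVE-2022-31129", 7.5, (2, 18, 0), (2, 29, 4)),
]

def parse_version(spec: str) -> Version:
    """Parse 'MAJOR.MINOR.PATCH'. Simplification: a leading ^ or ~ is
    stripped and the range is treated as its lower bound."""
    major, minor, patch = spec.lstrip("^~").split(".")
    return int(major), int(minor), int(patch)

def changed_dependencies(old_pkg: str, new_pkg: str) -> dict[str, str]:
    """Dependencies that are new, or whose version spec changed, between two package.json texts."""
    old = json.loads(old_pkg).get("dependencies", {})
    new = json.loads(new_pkg).get("dependencies", {})
    return {name: spec for name, spec in new.items() if old.get(name) != spec}

def find_vulnerabilities(old_pkg: str, new_pkg: str) -> list[str]:
    """Match every changed dependency against the advisory table."""
    findings = []
    for name, spec in changed_dependencies(old_pkg, new_pkg).items():
        for adv in ADVISORIES:
            if adv.package != name:
                continue  # only parse versions for packages we have advisories for
            if adv.introduced <= parse_version(spec) < adv.fixed:
                fixed = ".".join(map(str, adv.fixed))
                findings.append(
                    f"{name}@{spec}: {adv.cve} (CVSS {adv.cvss}), upgrade to {fixed} or later"
                )
    return findings

if __name__ == "__main__":
    before = '{"dependencies": {"react": "18.2.0"}}'
    after = '{"dependencies": {"react": "18.2.0", "moment": "2.24.0"}}'
    for finding in find_vulnerabilities(before, after):
        print(finding)  # moment@2.24.0: CVE-2022-31129 (CVSS 7.5), upgrade to 2.29.4 or later
```

A production agent would resolve exact versions from the lockfile and pull advisories from a maintained feed; the sketch keeps both local so the matching logic stays visible.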

Why Human-Only Security Doesn’t Scale Anymore

Today’s software ecosystem is complex:

- Distributed systems

- API-heavy backends

- Hundreds of microservices

- Thousands of code changes and deployments a month

Security teams are expected to keep up with all of it.

Traditional methods like:

- Manual reviews

- Scheduled vulnerability scans

- Alert triage queues

- Patch sprints

…simply can’t scale fast enough anymore.

Even the most experienced DevSecOps teams are constantly playing catch-up, not for lack of skill, but because of the sheer volume and velocity of modern software.

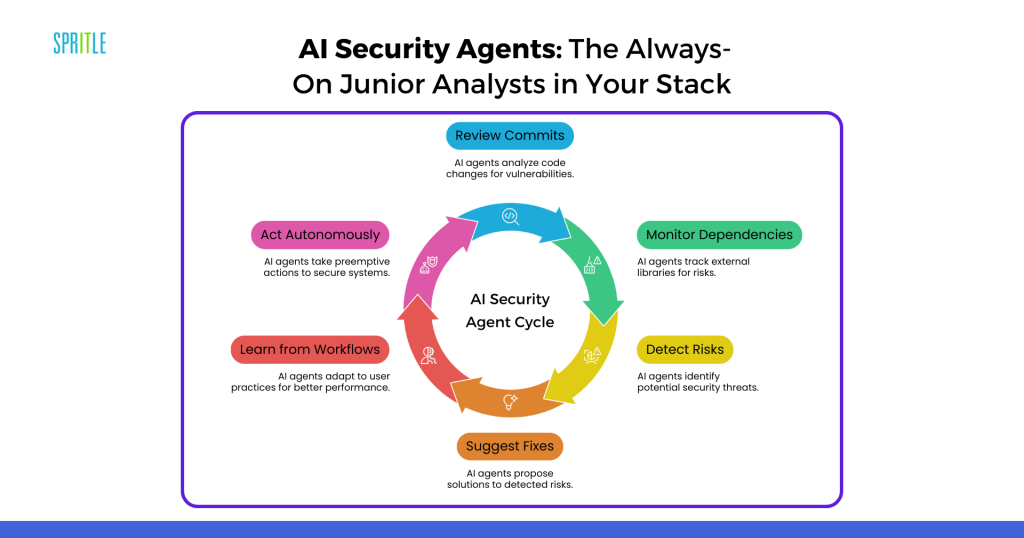

Enter AI Security Agents

AI security agents are not replacements.

They’re force multipliers.

Picture them as tireless junior analysts who:

- Review every commit

- Monitor every dependency

- Detect infrastructure-as-code risks

- Catch misconfigurations instantly

- Suggest fixes automatically

They never take breaks.

They learn from your workflows.

And sometimes, they act before any human ever logs in.

This isn’t theoretical. We’re already seeing:

- GitHub Copilot can suggest more secure coding patterns and alternatives when you’re writing code.

For example, if you start typing a SQL query with string concatenation, it might suggest a parameterized query instead. However, it doesn’t actively flag existing insecure code in real time; it’s more about steering you toward better practices as you write.

- Microsoft Security Copilot acts as an AI assistant that helps security analysts by summarizing threat intelligence, explaining attack patterns, and providing context about security alerts.

It can help analysts understand what they’re looking at faster, but it doesn’t automatically generate patches or take remediation actions – it’s focused on analysis and explanation.

- Internal red-teaming tools powered by AI in enterprise environments
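Here is the kind of change the Copilot example describes, sketched with Python's standard `sqlite3` module; the `users` table and the function names are hypothetical. The unsafe version splices user input into the SQL text, so an input like `' OR '1'='1` rewrites the query's logic. The safe version passes the input as a bound parameter, so the driver treats it strictly as a value.

```python
import sqlite3

def setup() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # String concatenation: the input becomes part of the SQL statement itself.
    query = "SELECT email FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the ? placeholder keeps the input as a plain value.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()

if __name__ == "__main__":
    conn = setup()
    payload = "' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
    print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
```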

But Let’s Be Real — It’s Not Plug-and-Play

This is where many teams stumble.

The AI tools are powerful — but integration into your stack isn’t magic.

What it takes to succeed:

- Context-aware setup — an e-commerce app has different risks than a healthcare platform. The AI must know what matters to your business.

- CI/CD integration — alerts should reach developers where they already work (e.g., GitHub, GitLab, CI pipelines).

- Governance controls — no one wants bots auto-merging insecure fixes.

- Feedback loops — your team’s judgment should refine what the AI learns.

In short:

Don’t “install and forget.”

Onboard and collaborate.
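A feedback loop can start as simple bookkeeping: record each human verdict on the agent's findings and stop surfacing rules that reviewers keep rejecting. The sketch below is hypothetical; the rule IDs, the five-review minimum, and the 80% false-positive cutoff are arbitrary assumptions rather than values from any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class FeedbackTracker:
    """Hypothetical tracker of reviewer verdicts per detection rule."""
    min_reviews: int = 5      # don't judge a rule on too few verdicts
    max_fp_rate: float = 0.8  # suppress rules rejected at least this often
    verdicts: dict[str, list[bool]] = field(default_factory=lambda: defaultdict(list))

    def record(self, rule_id: str, accepted: bool) -> None:
        """Store a reviewer's verdict: True = real issue, False = false positive."""
        self.verdicts[rule_id].append(accepted)

    def false_positive_rate(self, rule_id: str) -> float:
        history = self.verdicts.get(rule_id, [])
        return history.count(False) / len(history) if history else 0.0

    def should_surface(self, rule_id: str) -> bool:
        history = self.verdicts.get(rule_id, [])
        if len(history) < self.min_reviews:
            return True  # not enough feedback yet, so keep showing it to humans
        return self.false_positive_rate(rule_id) < self.max_fp_rate

if __name__ == "__main__":
    tracker = FeedbackTracker()
    for _ in range(5):
        tracker.record("hypothetical-noisy-rule", accepted=False)
    tracker.record("outdated-dependency", accepted=True)
    print(tracker.should_surface("hypothetical-noisy-rule"))  # False
    print(tracker.should_surface("outdated-dependency"))      # True
```

In practice you would probably route suppressed rules to a periodic human review queue rather than drop them outright, so the loop can't quietly bury a real issue.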

How Siva’s Team Did It Right

Let’s go back to Siva’s AI engineering team.

Their rollout was strategic:

- Listen-only mode: the agent observed behaviour, flagged issues, and remained passive.

- Sandbox simulation: it proposed fixes in a non-production environment.

- Human-in-the-loop: developers reviewed, corrected, or approved actions.
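A staged rollout like this can be enforced in code by letting the agent's current stage decide which actions it may take. The sketch below is hypothetical: the stages mirror the three steps above, while the action names and the rule that merging always requires human approval are assumptions (echoing the governance-controls point), not a description of how Siva's team configured their agent.

```python
from enum import Enum, auto

class Stage(Enum):
    LISTEN_ONLY = auto()        # observe and flag, stay passive
    SANDBOX = auto()            # may also propose fixes in a non-production environment
    HUMAN_IN_THE_LOOP = auto()  # may open PRs that developers review

class Action(Enum):
    FLAG_ISSUE = auto()
    PROPOSE_SANDBOX_FIX = auto()
    OPEN_PULL_REQUEST = auto()
    MERGE = auto()

# Actions the agent may take on its own at each stage; each stage adds to the previous one.
AUTONOMOUS = {
    Stage.LISTEN_ONLY: {Action.FLAG_ISSUE},
    Stage.SANDBOX: {Action.FLAG_ISSUE, Action.PROPOSE_SANDBOX_FIX},
    Stage.HUMAN_IN_THE_LOOP: {Action.FLAG_ISSUE, Action.PROPOSE_SANDBOX_FIX, Action.OPEN_PULL_REQUEST},
}

def is_permitted(stage: Stage, action: Action, human_approved: bool = False) -> bool:
    """Return True if the agent may perform `action` at `stage`."""
    if action is Action.MERGE:
        # Governance rule: never merge without explicit human approval.
        return stage is Stage.HUMAN_IN_THE_LOOP and human_approved
    return action in AUTONOMOUS[stage]

if __name__ == "__main__":
    print(is_permitted(Stage.LISTEN_ONLY, Action.OPEN_PULL_REQUEST))                 # False
    print(is_permitted(Stage.HUMAN_IN_THE_LOOP, Action.OPEN_PULL_REQUEST))           # True
    print(is_permitted(Stage.HUMAN_IN_THE_LOOP, Action.MERGE))                       # False
    print(is_permitted(Stage.HUMAN_IN_THE_LOOP, Action.MERGE, human_approved=True))  # True
```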

Within weeks, the benefits became clear:

- 60% of low-to-medium vulnerabilities were caught before reaching the security team.

- Time-to-patch improved dramatically.

- Engineers saw fewer repetitive tasks and had more time for big-picture thinking.

Security became predictable — not panicked.

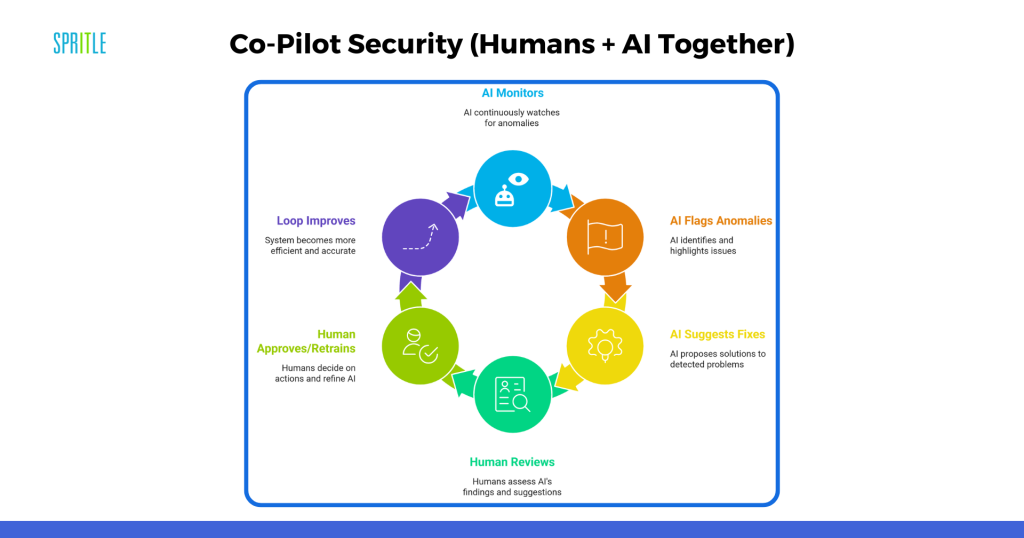

Co-Pilot Security: Humans + AI Together

AI agents won’t replace teams.

They’ll elevate them.

The model is simple:

- AI constantly monitors, flags anomalies, and suggests fixes

- Humans step in to review, approve, or retrain

- Together, the loop gets smarter — and faster

Think of it like autopilot in an airplane.

It holds the course and alerts the pilot.

But the pilot still flies the plane.

The Gap Between Demos and Reality

Slick product demos show AI doing amazing things:

- Code commit → Issue flagged → Fix suggested → PR raised

But real teams know better.

Here’s what demos skip:

- Legacy codebases with outdated dependencies

- Edge cases no model has seen before

- Dev teams juggling multiple languages and frameworks

- Business-specific security exceptions that aren’t in the docs

This is why off-the-shelf AI agents often need deep customization.

What Smart Security Looks Like

Let’s imagine a better scenario:

- It’s Friday evening. A deployment goes live.

- An AI agent watches silently, detects a high-risk vulnerability, and opens a PR.

- By Monday, a fix is already waiting for review: no incidents, no fire drills.

- Developers come in to a helpful PR, not an angry alert.

- Security leads get time to plan, not just react.

That’s code-speed security in action.

A Vision Worth Building

This isn’t about hype.

It’s about getting ahead of risk, not chasing it.

AI agents won’t do your job for you — but they’ll give you the tools, speed, and space to do it better.

✅ Time to think

✅ Time to lead

✅ Time to build systems — not just fix them

FAQs

1. Is AI security only for large enterprises?

No. Lightweight tools now serve startups and mid-size teams affordably.

2. What if the AI flags the wrong issue?

You control the loop. Humans always approve before production.

3. How is this different from a regular scanner?

Scanners alert. AI agents act: they draft the patch and open a PR for human review.

4. Can it work with legacy codebases?

Yes, but it needs tuning to cope with inconsistent conventions and outdated dependencies.

5. Will it reduce alert fatigue?

It should, once tuned. A well-configured agent filters noise and raises only what matters.
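Much of that filtering is unglamorous bookkeeping. The hypothetical sketch below collapses duplicate findings (the same CVE in the same package reported by several scans) and only raises those at or above a severity cutoff; the `Finding` fields are illustrative, and the 7.0 default is an assumed choice that matches the start of the CVSS v3 "High" band.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    package: str
    cve: str
    cvss: float
    source: str  # which scan or pipeline run reported it

def triage(findings: list[Finding], min_cvss: float = 7.0) -> list[Finding]:
    """Deduplicate by (package, CVE), drop anything below min_cvss,
    and return the rest sorted from most to least severe."""
    unique: dict[tuple[str, str], Finding] = {}
    for f in findings:
        unique.setdefault((f.package, f.cve), f)  # keep the first report of each pair
    kept = [f for f in unique.values() if f.cvss >= min_cvss]
    return sorted(kept, key=lambda f: f.cvss, reverse=True)

if __name__ == "__main__":
    findings = [
        Finding("moment", "CVE-2022-31129", 7.5, "pr-check"),
        Finding("moment", "CVE-2022-31129", 7.5, "nightly-scan"),   # duplicate report
        Finding("some-lib", "EXAMPLE-LOW-1", 4.3, "nightly-scan"),  # hypothetical low-severity finding
    ]
    for f in triage(findings):
        print(f.package, f.cve, f.cvss)  # moment CVE-2022-31129 7.5
```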

The Future of DevSecOps Is Smarter, Not Harder

How Do You Know If You’re Ready?

Ask yourself:

- Are we firefighting daily?

- Are our tickets piling up?

- Are low-level bugs burning our time?

If yes, it’s time to consider AI co-pilot security — a smarter way to scale your efforts without burning out.

How Can You Build Trust in AI Security Agents?

- Start small and test in sandbox mode

- Measure impact with real metrics

- Continuously refine with feedback

- Design for edge cases and fallbacks

- Always keep humans in the loop
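For "measure impact with real metrics," the two outcomes from Siva's rollout make a reasonable starting point: how long fixes take, and what share of findings the agent caught before they reached the security team. The sketch below computes both from a hypothetical list of resolved findings; the record fields and sample timestamps are made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median

@dataclass
class ResolvedFinding:
    detected_at: datetime
    patched_at: datetime
    caught_by_agent: bool  # True if the agent flagged it before the security team saw it

def median_hours_to_patch(records: list[ResolvedFinding]) -> float:
    """Median time from detection to patch, in hours."""
    hours = [(r.patched_at - r.detected_at).total_seconds() / 3600 for r in records]
    return median(hours)

def agent_catch_rate(records: list[ResolvedFinding]) -> float:
    """Share of findings the agent caught first (0.0 to 1.0)."""
    if not records:
        raise ValueError("no records to measure")
    return sum(r.caught_by_agent for r in records) / len(records)

if __name__ == "__main__":
    # Made-up sample data for illustration only.
    records = [
        ResolvedFinding(datetime(2024, 1, 5, 15, 8), datetime(2024, 1, 5, 17, 8), True),  # 2 h
        ResolvedFinding(datetime(2024, 1, 6, 9, 0), datetime(2024, 1, 7, 9, 0), False),   # 24 h
        ResolvedFinding(datetime(2024, 1, 8, 10, 0), datetime(2024, 1, 8, 14, 0), True),  # 4 h
    ]
    print(f"median time-to-patch: {median_hours_to_patch(records):.1f} h")  # 4.0 h
    print(f"agent catch rate: {agent_catch_rate(records):.0%}")             # 67%
```

Tracking these before and after adoption turns a claim like "time-to-patch improved" into a number you can defend.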

Final Take: Your Smartest Security Teammate Might Be AI

Tired of alerts, rushed patches, and burnout?

AI co-pilots flip that script — catching issues early, suggesting safe fixes, and giving your team time to focus on what truly matters: building.

The best teams aren’t afraid of AI — they train it, test it, and team up with it.

✅ Detect early

✅ Reduce noise

✅ Suggest smart fixes

✅ Scale with your stack

✅ Keep your engineers sane

Our experts’ approach: we help teams make AI security agents work for their stack by tuning, testing, and integrating them where it actually matters.